Why is the calibration confidence level in Confido only between 50% and 100%?

Confidence is a percentage expressing one's level of certainty regarding a specific statement (usually a prediction or estimate) that can turn out to be either true or false.

Confidence can range from 0% (absolute certainty the statement is false) through 50% (equally likely to be true or false, representing zero knowledge about the statement) to 100% (absolute certainty the statement is true).

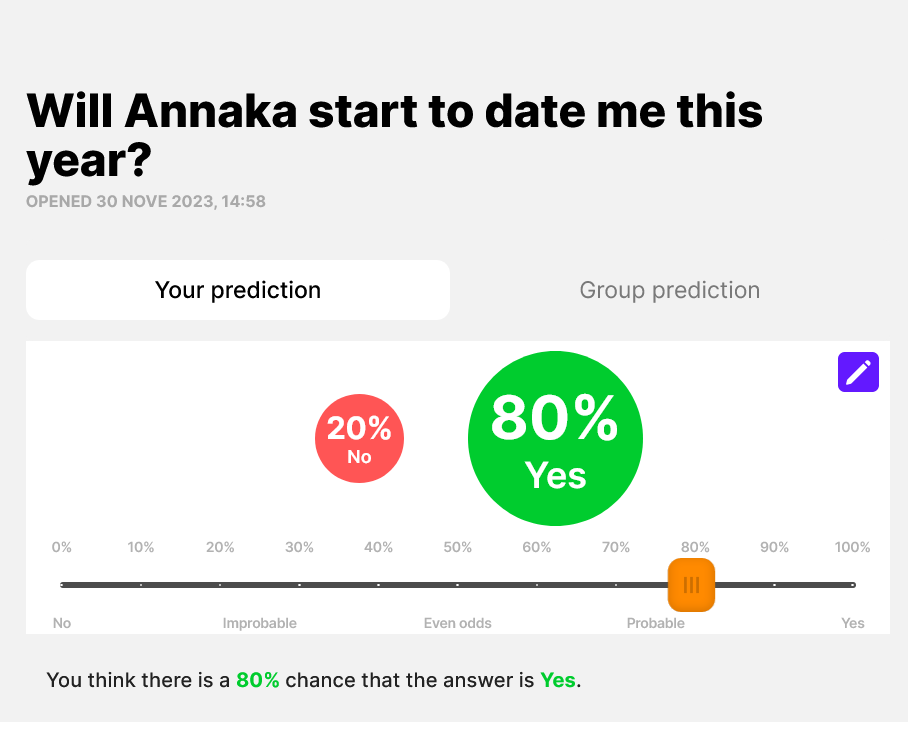

For purposes of calibration, we always consider statements with confidence greater than 50% ('beliefs').

For example, a prediction of 40% on a yes/no question "Will we finish the project on time?" implies a 40% confidence in the statement "We will finish the project on time" and a 60% confidence in the statement "We will finish the project late".

These confidences always add up to 100% and are basically two ways of talking about the same thing. We will consider only the latter when computing calibration.

Thus the confidence axis ranges only from 50% to 100%. On this range, greater numbers represent more certainty. (This would not be true on the whole 0% to 100% range, as both 0% and 100% represent absolute certainty.)
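The folding described above can be sketched in a few lines of Python. This is only an illustration, not Confido's actual code: the function name `to_belief` is made up for this example, and treating an exact 50% prediction as a belief in "yes" is an assumption.

```python
def to_belief(probability_yes: float) -> tuple[bool, float]:
    """Fold a yes/no probability into (believed outcome, confidence >= 0.5)."""
    if not 0.0 <= probability_yes <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    # Assumption: a prediction of exactly 50% is counted as a belief in "yes".
    believed_yes = probability_yes >= 0.5
    confidence = probability_yes if believed_yes else 1.0 - probability_yes
    return believed_yes, confidence


# The 40% example from the text: the belief is "we will finish late"
# (believed_yes is False) and the confidence is about 60%.
believed_yes, confidence = to_belief(0.40)
print(believed_yes, round(confidence, 2))
```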

What is accuracy and confidence bracketing?

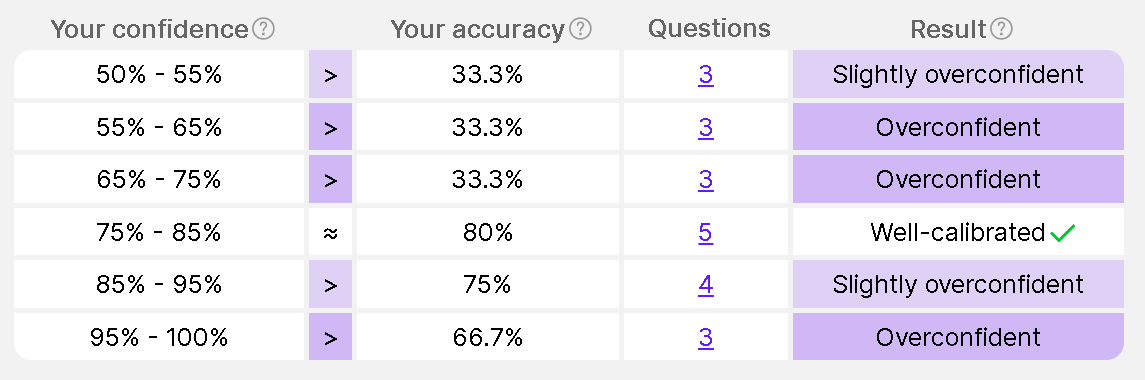

For a given confidence level, your accuracy is simply the proportion of your beliefs with that confidence that turn out to be correct.

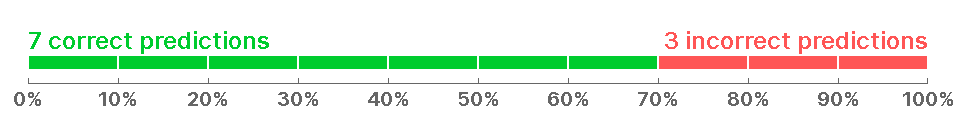

For example, if you made 10 predictions at 80% confidence and 7 of them turned out correct, your accuracy would be 70% (making you slightly overconfident).

As accuracy is an average metric, we need to aggregate enough predictions with a given confidence level to get a meaningful result. (For example, if you made only two 80% predictions, one correct, one not, for an accuracy of 50%, it is hard to say whether you are overconfident or just had bad luck.)
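To get a feeling for how much luck is involved with only two predictions, here is a small back-of-the-envelope calculation. It assumes that the predictions are independent and that you are perfectly calibrated (each 80% belief really has an 80% chance of being correct); the helper name `prob_at_most` is only illustrative.

```python
from math import comb


def prob_at_most(k_correct: int, n: int, p: float) -> float:
    """Probability of at most k_correct correct beliefs out of n,
    when each belief is independently correct with probability p."""
    return sum(
        comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(k_correct + 1)
    )


# Two predictions at 80% confidence, at most one of them correct.
chance = prob_at_most(1, 2, 0.8)
print(round(chance, 2))  # 0.36
```

So even a perfectly calibrated forecaster would see an accuracy of 50% or worse in roughly one out of three such pairs, which is why a result based on two predictions says very little.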

To achieve that, instead of computing accuracy separately for each exact confidence level (such as 80%, 81%, or even 80.5%), Confido groups predictions with similar confidence levels into confidence brackets and then computes accuracy for each bracket.

For example, the 80% bracket contains all predictions with confidence in the range from 75% to 85%. If you made four predictions with confidence levels 78%, 80%, 80%, 84% and three of them were correct, your accuracy in the 80% bracket would be 75%.

(This makes the result less precise because it mixes beliefs with different confidence levels, but this is compensated by averaging more predictions when computing accuracy, which reduces the effect of random chance.)
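As a rough sketch, bracketing and per-bracket accuracy could look like this in Python. The function name and the data layout (a list of confidence/correctness pairs) are made up for this example, and how Confido treats a confidence lying exactly on a bracket boundary is not stated here, so the boundary handling below is an assumption.

```python
def bracket_accuracy(beliefs, center, half_width=0.05):
    """Accuracy of the beliefs whose confidence falls into one bracket.

    beliefs: list of (confidence, was_correct) pairs.
    Assumption: the lower boundary belongs to the bracket, the upper does not.
    Returns None when the bracket is empty.
    """
    in_bracket = [
        was_correct
        for confidence, was_correct in beliefs
        if center - half_width <= confidence < center + half_width
    ]
    if not in_bracket:
        return None
    return sum(in_bracket) / len(in_bracket)


# The example from the text: four beliefs in the 80% bracket, three correct.
beliefs = [(0.78, True), (0.80, True), (0.80, False), (0.84, True)]
accuracy = bracket_accuracy(beliefs, center=0.80)
print(accuracy)  # 0.75
```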

What does it mean to be well-calibrated, overconfident and underconfident?

Based on your results, your calibration in a given bracket can be classified as:

- Well-calibrated when your confidence tends to roughly match your accuracy. In that case, your confidence can be used as an indicator of how likely you are to be right for predictions where the answer is not yet known. This is what you strive for.

- Overconfident if your confidence tends to be larger than the resulting accuracy, i.e. you tend to be more strongly convinced of your beliefs than is warranted. Thus other people (and probably even you yourself) should take them with a grain of salt. Most people on Earth start out overconfident, so if that includes you, don't worry about it too much. There is a lot of room for improvement.

- Underconfident in the much rarer opposite case, when you tend to understate your confidence, i.e. you claim to know less than you actually do.

It is important to note that being well-calibrated, overconfident or underconfident lies on a spectrum. There is no sharp boundary between being well-calibrated and over-/under-confident.

Confido classifies your calibration into several discrete categories (well calibrated, slightly overconfident, overconfident, ...) but these are artificial and purely for easier visual orientation and color coding. The boundaries between these categories should not be taken very seriously.
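To make the comparison concrete, here is a minimal sketch of such a classification. The `tolerance` threshold is purely hypothetical and is not one of the boundaries Confido actually uses; as noted above, any such boundary is arbitrary.

```python
def classify(confidence: float, accuracy: float, tolerance: float = 0.05) -> str:
    """Compare a bracket's confidence with its observed accuracy.

    tolerance is a hypothetical cut-off chosen only for this example.
    """
    gap = confidence - accuracy
    if gap > tolerance:
        return "overconfident"
    if gap < -tolerance:
        return "underconfident"
    return "well-calibrated"


# 80% confidence with 70% accuracy, as in the earlier example.
result = classify(0.80, 0.70)
print(result)  # overconfident
```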

What do I have to do to see my score?

In order for a prediction to be included in calibration computation, several conditions must hold:

- The question must have a score time set in its schedule. This determines the time point from which predictions are used for calibration computation.

- You must have made at least one prediction before the score time. (If you updated your prediction, the last update made before the score time is used for purposes of calibration and any later updates are ignored.)

- The question must have a resolution and be marked as resolved.

The score time is set by the question author or another moderator, either when creating the question or later when resolving it. It should be set based on a compromise between the forecasters having enough time to think about the question and the answer not yet being too obvious.
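The conditions above can be summarised in a short sketch. The `Prediction` class, its field names and the function name are hypothetical and only serve this illustration; they do not describe Confido's internal data model. Treating "before the score time" as strictly earlier is also an assumption.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass
class Prediction:
    made_at: datetime
    probability_yes: float


def prediction_for_calibration(
    predictions: list[Prediction],
    score_time: Optional[datetime],
    resolved: bool,
) -> Optional[Prediction]:
    """Return the prediction that counts for calibration, or None."""
    # The question needs both a score time and a resolution.
    if score_time is None or not resolved:
        return None
    # Only predictions made before the score time are eligible.
    eligible = [p for p in predictions if p.made_at < score_time]
    if not eligible:
        return None
    # The last update before the score time is the one that is used.
    return max(eligible, key=lambda p: p.made_at)


score_time = datetime(2024, 3, 1)
history = [
    Prediction(datetime(2024, 2, 1), 0.70),
    Prediction(datetime(2024, 2, 20), 0.85),
    Prediction(datetime(2024, 3, 5), 0.99),  # made after the score time, ignored
]
used = prediction_for_calibration(history, score_time, resolved=True)
print(used.probability_yes)  # 0.85
```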

What about numeric/date questions?

Computing calibration requires converting your predictions into belief + confidence pairs, where the beliefs can be later judged as either correct or incorrect.

This is simple for yes/no questions. (We just take whichever of the two possible outcomes you consider more likely, plus the probability you assigned to that outcome.)

But for numeric questions, the situation is a bit more complicated, as there are basically infinitely many possible outcomes.

To address this, Confido converts each numeric prediction into multiple beliefs based on its confidence intervals.

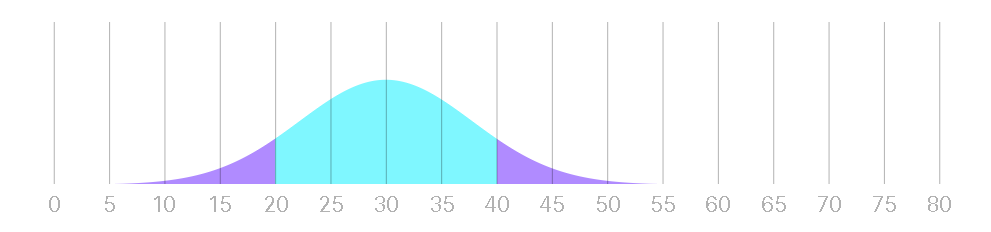

For example, consider a numeric prediction like this:

From such a prediction (probability distribution), Confido derives one belief based on a confidence interval for each confidence bracket:

The confidences are chosen as the midpoints of each bracket. The 80% confidence interval (bolded in the table) is what you enter when creating a prediction, the others are mathematically derived from the probability distribution.

Thus a single prediction is converted into 6 belief+confidence pairs (one in each confidence bracket), which are then each considered separately for purposes of calibration, as if you had made 6 separate yes/no predictions.
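The idea can be sketched as follows. Loud caveat: the shape of the probability distribution Confido uses is not described here, so this example simply assumes a normal distribution and symmetric (central) confidence intervals, and the list of confidence levels is a made-up subset rather than Confido's actual brackets. All function names are illustrative.

```python
from statistics import NormalDist


def central_interval(dist: NormalDist, confidence: float) -> tuple[float, float]:
    """Symmetric interval containing `confidence` of the probability mass.

    confidence must be strictly between 0 and 1 (inv_cdf rejects 0 and 1).
    """
    tail = (1.0 - confidence) / 2.0
    return dist.inv_cdf(tail), dist.inv_cdf(1.0 - tail)


def numeric_beliefs(dist, confidences, resolved_value):
    """One (confidence, was_correct) pair per confidence level.

    Each belief is the statement "the true value lies inside this interval".
    """
    beliefs = []
    for confidence in confidences:
        low, high = central_interval(dist, confidence)
        beliefs.append((confidence, low <= resolved_value <= high))
    return beliefs


# Assumed prediction: normal distribution centred on 100 with spread 10.
dist = NormalDist(mu=100, sigma=10)
beliefs = numeric_beliefs(dist, [0.6, 0.8, 0.9], resolved_value=112)
print(beliefs)  # [(0.6, False), (0.8, True), (0.9, True)]
```

Here the true value of 112 falls outside the 60% interval but inside the 80% and 90% intervals, so one prediction yields one incorrect and two correct beliefs.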

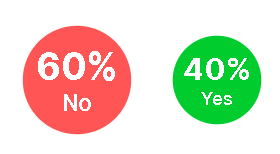

What is group calibration?

Apart from your individual calibration, Confido can also compute group calibration. But it is important to clarify what that means.

Group calibration is calibration computed based on the group prediction, i.e. calibration of a hypothetical individual whose predictions were equal to the group prediction for each question.

Group prediction is the average of all the individual predictions on a given question. For yes/no questions, it is simply the average of everyone's confidences.

For numeric/date questions, we are averaging probability distributions, which is beyond the scope of this explanation.

In either case, you can see the group prediction (if you have permissions to view it) on the Group prediction tab of each question.

It is important to note that group calibration is not an average of the individual calibrations.
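For yes/no questions, the group prediction described above can be sketched like this. The example assumes that "confidences" means the probabilities each person assigned to the "yes" answer; the function name is made up for this illustration.

```python
from statistics import fmean


def group_prediction(probabilities_yes: list[float]) -> float:
    """Group prediction for a yes/no question: the plain average of the
    probabilities that individual forecasters assigned to "yes"."""
    return fmean(probabilities_yes)


# Three forecasters predicting 90%, 60% and 30% on the same question.
group = group_prediction([0.9, 0.6, 0.3])
print(round(group, 2))  # 0.6
```

Group calibration then treats this 60% like a prediction made by a single hypothetical forecaster, regardless of how well calibrated each of the three individuals is.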